Please note:

So far my "interventions" into the field of AI development have been sporadic; the full picture emerges from reading all the major pieces on the matter, e.g.:

On The Definition of AI

Relax! The real AI is Not Coming Any Soon!

Applying AI to Study Learning And Teaching Practices

Table of contents


Who will train our “artificial puppies”? But more importantly – who will train the trainers? Two questions which will haunt AI developers very soon.

(This piece continues from "The Dawn of the New AI Era", the first piece on AI training.)

What will happen to a puppy when it grows up?

The answer to this question depends on how the puppy is raised.

Its physical health depends on the food and exercise its owner provides every day. But its skills (what it will be able to do, which commands it will understand and execute, and how well it will execute them) depend solely on how good a job the trainer does while the puppy is growing up. The best trainers can make animals do amazing things. Dogs can sniff out drugs, find people buried by a snow avalanche, recognize a gun and bite the hand that holds it. Everyone who has been to a circus knows that animals can do things that even some people cannot do, for example ride a barrel.

Animal trainers pass their training techniques on from generation to generation.

But what will happen to a puppy if it is not trained?

Nothing!

It will simply remain a puppy, but in an adult body.

Physically an adult, but mentally still a puppy.

Exactly like humans who do not get good training, a.k.a. education, or like an AI system that is not trained, for the same reason.

So, instead of talking about animals, we could talk about people.

For example, if baby Einstein had been left in the jungle with monkeys, he would have become a monkey: a very smart one, but still a monkey.

Or if baby Einstein had remained living with people but had not received ANY education, he would still have been very smart, but he would not have been able to create his theory of relativity. In fact, he would never have written a single paper. To do that, he would have had to invent, completely on his own, the alphabet, grammar, arithmetic and algebra, trigonometry and calculus, and also rediscover all the laws of physics. And even if he had been capable of doing all that, he simply would not have had enough time.

But for the purpose of this paper we do not need to talk about people. Animals will do, because currently there is not a single AI system that is smarter than an animal (and such a system is not going to be developed any time soon; don't trust the hype, it is just hype).

What AI can do today does not exceed what a trained dog can do, i.e. recognize certain objects (including faces), recognize certain voices and voice commands, and execute those commands.

A lot of effort has been focused on developing algorithms that allow AI to make fewer mistakes when recognizing certain audio and visual (or other) patterns. To achieve the highest possible rate of successful pattern recognition, an AI algorithm should be able to correct its own mistakes (via "deep" and other types of learning that AI specialists borrowed from educational psychology), and that, according to AI enthusiasts, makes it intelligent.

Like a toddler who learns not to touch a hot plate, or a puppy that learns not to bite a bee: an intelligent action of deep learning.

Using our analogy between a puppy and AI, we can say that most of the effort in the AI development field has been channeled into creating “artificial puppies”, or APs (in a general sense: an AI system does not have to look like a puppy).

The people who created those APs were also their trainers.

Of course, they needed to know that the AP they had made was trainable; otherwise they would have had to develop a different algorithm.

Recently, however, the situation in the AI field has been changing rapidly.

The biggest players in the field of AI (Google, Microsoft, and many others) now know very well how to create an “Artificial Puppy” (of several kinds), and can even mass-produce such AI systems. Large companies like Google or Microsoft rent out their AI to startups and companies exploring AI opportunities.

Using our analogy, we can say that nowadays anyone can “buy” an “Artificial Puppy”.

Getting “a puppy” is not a problem anymore.

The problem is how to train it properly and effectively, so that the “puppy” does for its owner all the “tricks” the owner wants it to do.

Well, if you get a puppy and want it to be well trained, you have only 2 options:

1. You need to hire a good trainer for your puppy.

or

2. You have to become a good trainer yourself.

Which is actually just one option: you need to find a good trainer, and then either have him/her train your puppy, or have him/her teach you how to train your puppy.

And that is the problem AI professionals ignore or try to avoid discussing (who would want to kill the hype?).

There are not many good professionals in the field of training AI systems. The existing good AI trainers are the people who have been involved in developing the existing AI systems. Everyone else is like a veterinarian trying to convince us that he/she can perform heart surgery on people (or even like a “village”/medieval doctor selling potions for every known illness).

Every day (well, maybe every other day) there is another publication predicting that AI will soon do something amazing (including killing all humans). And those publications are not wrong; they just greatly exaggerate the timing. Nothing significant will happen any time soon. But eventually AI will be able to do everything a biological intelligent species can do (for starters, reading Isaac Asimov would help you get prepared).

Everyone who is considering using AI for his/her company should know about the “porridge from an axe” effect, described in “The Dawn of The New AI Era” (a 6-minute read); and “On a Definition of AI” (an 8-minute read) is helpful for gaining a better understanding of the essence of AI (and of the essence of thinking in general).

Just keep in mind that the simplest model of any currently available AI system is “an artificial puppy”. If you want one, first figure out what you want from it when it "grows up" (i.e. is trained and functioning).

Any AI system is, yes, a system. Like a car, or the heating system in a house.

There are people who design and develop it, people who install and maintain it, people who write the manual, and people who use it, a.k.a. users or customers.

If you are a user of AI, you should know what you want to use it for, and learn how to use it.

If you want to use a car, you do not need to know anything about internal combustion, torque, or gear rotation. You need to learn how to operate it and keep it in working condition. For that you have a driving school, or a friend, or a parent (or, if you believe in your good luck, you can try the oldest scientific method, trial and error), and a manual.

But there is a difference between using a car and using AI.

You do not have to train a car how to drive; it has been built that way.

To use AI, you have to train it to work the way you need it to.

You need to know WHAT you need from your AI, what your AI needs to do for you. And you have to teach your AI to do it properly. And you need to know what "properly" means for you.

And if you cannot do it, and your trainer is not good, your AI will be useless to you.

The fact that AI must be properly trained in order to be useful is hidden between the lines of publications saying, to paraphrase, that “it is not just about the amount of data, it is about how the data are structured”. And who will be structuring your data for you? AI professionals are not experts in your field; they are experts in developing pattern recognition systems, and that’s that. And even if AI recognizes some patterns in your data, how would you know that those patterns are at the core of your company’s functioning? For example, for a long time, based on visible patterns, people thought that the Earth was flat and that the Sun moved around it.

Those are just a few of the important questions for everyone who is considering using AI for their business.

AI developers will NOT be able to answer those questions.

Professionals in knowledge production and science development, and experts in human intelligence, WILL.

In order to make the transition from academic study to mass use of AI, the AI field needs a new type of professional, able to bridge AI developers and consumers; professionals who need to be able to:

1. install a specific AI system as part of the company’s workflow (but do not need to know how to design it);

2. train a specific AI system;

3. elicit from the customer the vision of the goals and criteria set for the proper functioning of a specific AI system;

4. design the proper process and structure for collecting appropriate data;

5. train the customer in using a specific AI system.

Such professionals do not need to be proficient in coding, or in AI development in general.

The core abilities of such a professional are the ability to analyze and the ability to teach (those abilities are necessary, but not sufficient).

The difference between a good “AI training representative” and a bad one is very similar to the difference between a good teacher and a bad one.

When in action ("in a class in front of students") they look very similar, but the results are very different.

AI developers need to:

1. begin a search for that type of people, and

2. begin designing the new profession.

The sooner the new profession becomes a professional field, the sooner and more broadly AI will be adopted in all areas of the economy.

Top AI developers/promoters reported to Congress that human-level AI (AHLI) will not be achieved for at least 20 more years. Even though the need for professional AI trainers will grow much faster than the development of human-level AI advances, professionals in the AI field do not pay as much attention to AI training as they do to, say, ethics.

For everyone who is struggling to figure out what AI could do for his/her business, I can offer this simple approach. Imagine you can hire as many interns as you want. They don't know much, but you can train them to do some simple but tedious job. Imagine how you would train those interns to do that job. There it is: this job can be done by AI trained like an intern. You're welcome!


This just demonstrates how far AI still is from actual intelligence (although such AI can be developed).


And finally, a word to investors. AI investors need to be careful when considering handing their money to a new startup that has "AI" in its name. Recently "blockchain" has become a buzzword. A company can change its name to include "blockchain", and investors are ready to put their money into it even if the company's actual business has nothing to do with blockchain technology (but may "explore an opportunity to use it", like "Long Island Iced Tea").

(from Barron's, March 19, 2018)

A similar phenomenon seems to be happening with "AI". The best way to make an investment decision would be to hire an AI expert who can audit the startup and clearly state: Is AI really at the core of the company's business? How much of the company's workflow relies on AI technologies? Could AI be replaced with a more traditional technical solution?

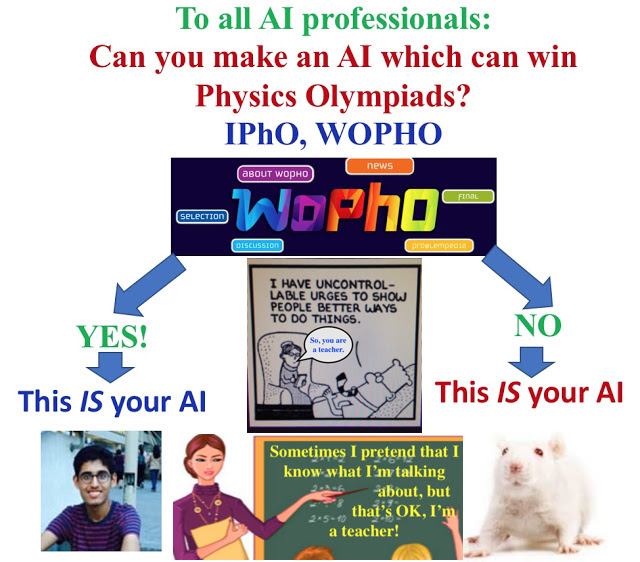

BTW: to the best of my knowledge, so far no large company or small startup has taken on the challenge of developing AI that would be able to win a Physics Olympiad (theoretical part).

Thank you for visiting,

Dr. Valentin Voroshilov

Education Advancement Professionals

To learn more about my professional experience:
